QEMU Tutorial (Version 10.0.3)

@author

Jiahao Lu @ XMU [Contact: lujhcoconut@foxmail.com]

@last_update

2025/07/31

1. What is QEMU

QEMU is an open-source system emulator and user-mode emulator.

It can emulate various hardware platforms, such as x86, ARM, RISC-V, and PowerPC. Its main capabilities include:

Virtualizing operating systems (e.g. running Linux or Windows)

Emulating hardware components such as CPU, memory, and I/O devices

Running programs across different architectures (e.g. executing ARM programs on an x86 host)

Working with KVM to provide hardware-accelerated virtualization (only when the guest and host share the same architecture, e.g., an x86 guest on an x86 host)

In full-system emulation mode (`qemu-system-*`), QEMU acts as a virtual machine monitor: it emulates a complete machine, and a full software stack runs on top of it, including:

CPU (e.g., x86, ARM)

BIOS or UEFI firmware

Bootloaders (e.g., GRUB)

Operating systems (e.g., Linux, Windows)

Storage devices (HDD/SSD), network interfaces, USB, and more

The emulated operating system runs independently of the host system, as if it were running on actual hardware provided by QEMU.

QEMU’s memory emulation is both fundamental and intricate, primarily reflected in the following aspects:

(i) Guest Physical Memory Emulation

QEMU creates a virtual physical memory region for the guest system (e.g., 512MB, 2GB).

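This memory is allocated as a contiguous range of the QEMU process's host virtual address space (it does not have to be physically contiguous on the host) and is then mapped into the guest's physical address space.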

Users can specify the memory size using the `-m` parameter.

```bash
qemu-system-x86_64 -m 2G -hda disk.img
```

(ii) Memory Mapping and Paging Mechanisms

The guest operating system continues to use its own virtual memory management, including structures like page tables and the TLB (Translation Lookaside Buffer).

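Under TCG (QEMU's software CPU emulation), QEMU emulates the CPU's memory accesses itself: it walks the guest page tables, caches translations in a software TLB, and delivers page faults to the guest. With KVM acceleration, the host CPU's hardware MMU performs these steps using two-level paging (Intel EPT / AMD NPT).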

Internally, QEMU's `MemoryRegion` and `AddressSpace` abstractions model device register (MMIO) mappings, DMA access, and other hardware-level memory operations.

(iii) Advanced Features

QEMU also supports:

NUMA topology: enabling distributed memory across multiple nodes

HugePages: improving memory access performance through large page mappings

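Shared memory: `ivshmem` exposes a host memory region that several VMs (and host processes) can map, while `vhost-user` shares guest memory with an external backend process such as a software switch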
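As a rough illustration of how the HugePages and ivshmem features above are requested on the command line, the script below assembles (but does not run) such a command. It is a sketch under assumptions: hugetlbfs is mounted at `/dev/hugepages` with enough free hugepages reserved, `/dev/shm/ivshmem` is an arbitrary file name for the shared region, and `build_mem_args` is a hypothetical helper. `memory-backend-file`, `-machine memory-backend=` and `ivshmem-plain` are existing QEMU options; `qemu-system-x86_64 -object help` and `-device help` list what your build supports.

```bash
#!/usr/bin/env bash
# Sketch: assemble (not run) a QEMU command line that backs guest RAM with
# hugepages and adds an ivshmem region. build_mem_args is a hypothetical
# helper; /dev/hugepages and /dev/shm/ivshmem are assumed paths.
set -euo pipefail

build_mem_args() {
  local ram=${1:-2G}   # guest RAM; the RAM backend must have the same size as -m
  local shm=${2:-4M}   # size of the region shared through ivshmem
  # ram0: guest RAM allocated from files on hugetlbfs.
  # shm0: a file in /dev/shm mapped with share=on so other VMs can map it too.
  local args=(
    qemu-system-x86_64
    -m "$ram"
    -object "memory-backend-file,id=ram0,size=$ram,mem-path=/dev/hugepages"
    -machine "memory-backend=ram0"
    -object "memory-backend-file,id=shm0,size=$shm,mem-path=/dev/shm/ivshmem,share=on"
    -device "ivshmem-plain,memdev=shm0"
  )
  printf '%s\n' "${args[*]}"
}

build_mem_args "$@"
```

Running it as `./mem_args.sh 2G 4M` prints the command for inspection. A second VM started with an `shm0` backend pointing at the same `mem-path` (again with `share=on`) maps the same shared region.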

(iv) Host Memory Management and Security

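QEMU allocates guest memory with system calls such as `mmap()`. Mapping flags (e.g., `MAP_NORESERVE`, `MAP_SHARED_VALIDATE`) can be used to tune performance and safety: `MAP_NORESERVE` skips reserving swap space for the mapping, and `MAP_SHARED_VALIDATE` behaves like `MAP_SHARED` but makes the kernel reject unknown flags (it is required for `MAP_SYNC`). DMA performed by device models such as virtio also relies heavily on correct memory management.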
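Because guest RAM is ordinary `mmap()`ed memory of the QEMU process, it is visible from the host in `/proc/<pid>/maps`. The hypothetical helper below reports the largest mapping in such a file; for a guest started with a few GiB of `-m`, that is usually the anonymous mapping holding guest RAM. Treat this as a heuristic, not a guarantee: with small guests other large allocations (for example TCG's translation buffer) can be bigger, and QEMU may reserve a little extra for alignment.

```bash
#!/usr/bin/env bash
# Sketch: print the largest mapping listed in a /proc/<pid>/maps file.
# largest_mapping is a hypothetical helper. For a QEMU process with a few GiB
# of guest RAM this is usually the anonymous mmap() holding guest memory.
set -euo pipefail

largest_mapping() {
  local maps=$1 best=0 best_line="" line range start end size
  while IFS= read -r line; do
    range=${line%% *}              # first field: "start-end" in hex
    start=${range%-*}
    end=${range#*-}
    size=$(( 16#$end - 16#$start ))
    if (( size > best )); then
      best=$size
      best_line=$line
    fi
  done < "$maps"
  printf '%d MiB  %s\n' $(( best / 1024 / 1024 )) "$best_line"
}

# Usage: ./largest_mapping.sh /proc/$(pgrep -n qemu-system)/maps
if (( $# > 0 )); then
  largest_mapping "$1"
fi
```

Run it as the same user as QEMU (or as root), since `/proc/<pid>/maps` of another user's process is not readable.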

Example

```bash
qemu-system-x86_64 \
  -m 2048 \
  -kernel bzImage \
  -append "root=/dev/sda1 console=ttyS0" \
  -hda rootfs.img \
  -nographic
```

-m 2048: Allocates 2 GB (2048 MiB) of memory to the guest machine.

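-kernel bzImage: Specifies the Linux kernel image to boot.

-append: Passes the kernel command line; here the root filesystem is on /dev/sda1 and the console is the first serial port (ttyS0).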

-hda: Sets the hard disk image (typically the root filesystem).

-nographic: Uses the terminal instead of a graphical interface (console output is redirected to the terminal).

2. Quick Start

Download

```bash
wget https://download.qemu.org/qemu-10.0.3.tar.xz
tar xvJf qemu-10.0.3.tar.xz
cd qemu-10.0.3
```

Local glib environment (needed if your distribution's glib is older than QEMU requires)

```bash
mkdir glib_local
cd glib_local
wget https://download.gnome.org/sources/glib/2.70/glib-2.70.0.tar.xz
tar xf glib-2.70.0.tar.xz
cd glib-2.70.0
mkdir build
cd build
meson setup --prefix=/home/dell/jhlu/learning/qemu_study/qemu-10.0.3/glib-local ..
ninja
sudo ninja install
cd ../../..   # back to the qemu-10.0.3 source directory
```

Write a bash script, `build_qemu.sh`, in the qemu-10.0.3 directory. It points pkg-config, the dynamic linker and `PATH` at the local glib, then runs `./configure` with any arguments you pass:

```bash
#!/bin/bash
set -x   # echo each command as it runs

export PKG_CONFIG_PATH="$(pwd)/glib-local/lib/x86_64-linux-gnu/pkgconfig:$PKG_CONFIG_PATH"
export LD_LIBRARY_PATH="$(pwd)/glib-local/lib/x86_64-linux-gnu:$LD_LIBRARY_PATH"
export PATH="$(pwd)/glib-local/bin:$PATH"

echo "Using pkg-config from: $(which pkg-config)"
echo "glib-2.0 version detected by pkg-config:"
pkg-config --modversion glib-2.0

./configure "$@"
```
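Since the point of the local glib is to satisfy QEMU's minimum glib version, it can help to stop before `./configure` when pkg-config still resolves an older one. The sketch below is meant to be sourced from `build_qemu.sh`. `version_ge` and `check_glib` are hypothetical helpers, the default minimum of 2.66.0 is an assumption (QEMU declares its actual requirement in its top-level `meson.build`), and the comparison relies on GNU `sort -V`.

```bash
#!/usr/bin/env bash
# Sketch, meant to be sourced from build_qemu.sh before ./configure:
# stop early if pkg-config still resolves an older glib.
# version_ge and check_glib are hypothetical helpers; 2.66.0 is an assumed
# default minimum (QEMU declares its real requirement in meson.build).

# version_ge A B: succeed if version A >= version B (uses GNU sort -V).
version_ge() {
  [[ "$(printf '%s\n' "$1" "$2" | sort -V | head -n 1)" == "$2" ]]
}

# check_glib [MIN]: compare the glib-2.0 version pkg-config reports with MIN.
check_glib() {
  local min=${1:-2.66.0} have
  if ! have=$(pkg-config --modversion glib-2.0); then
    echo "pkg-config cannot find glib-2.0" >&2
    return 1
  fi
  if version_ge "$have" "$min"; then
    echo "glib $have OK (>= $min)"
  else
    echo "glib $have is older than $min" >&2
    return 1
  fi
}
```

For example, save it as `glib_check.sh` (a hypothetical name) and add `source ./glib_check.sh && check_glib || exit 1` to `build_qemu.sh` right before `./configure "$@"`.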

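Build (configure first, then compile)

```bash
chmod +x build_qemu.sh
./build_qemu.sh      # runs ./configure; options pass through, e.g. --target-list=x86_64-softmmu
ninja -C build
```

Test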

```bash
ls build/qemu-system-*
./build/qemu-system-x86_64 --version
```

QEMU start

```bash
qemu-system-x86_64 \
  -kernel /home/dell/jhlu/colloid/tpp/linux-6.3/arch/x86/boot/bzImage \
  -initrd /home/dell/jhlu/learning/qemu_study/initramfs.gz \
  -nographic \
  -append "console=ttyS0 init=/init"
```

3. Memory Management in QEMU

3.1 Detailed Explanation of Command-Line Parameters

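```bash
qemu-system-x86_64 -m size=2048,slots=4,maxmem=8G
```

size: Initial memory size of the guest at boot. A bare number is interpreted as MiB, so `size=2048` means 2 GiB.

slots: Number of memory slots (DIMM sockets) available for hot-plugging additional memory.

maxmem: Maximum total memory the guest can have (base memory + hot-plugged memory). Give it a unit suffix such as `G`: only `size` treats a bare number as MiB, so `maxmem=8192` would be read as 8192 bytes and rejected as smaller than the initial memory.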
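The difference between `maxmem` and `size` is the memory you can add later. In the QEMU monitor this is typically done by creating a backend and plugging a DIMM into a free slot, e.g. `object_add memory-backend-ram,id=mem1,size=1G` followed by `device_add pc-dimm,id=dimm1,memdev=mem1`. The sketch below only does the arithmetic; `to_mib` and `hotplug_headroom` are hypothetical helpers that accept bare numbers (as MiB, like `size=`) and `M`/`G` suffixes, and they insist on a suffix for `maxmem` for the reason given above.

```bash
#!/usr/bin/env bash
# Sketch: how much memory can still be hot-plugged for
#   -m size=SIZE,slots=SLOTS,maxmem=MAXMEM
# to_mib and hotplug_headroom are hypothetical helpers, not QEMU tools.
set -euo pipefail

# to_mib SIZE: a bare number is read as MiB (like -m size=); M and G
# suffixes are supported. Fractions and other suffixes are rejected.
to_mib() {
  local v=$1
  if [[ ! $v =~ ^[0-9]+[MmGg]?$ ]]; then
    echo "unsupported size: $v" >&2
    return 1
  fi
  case $v in
    *[Gg]) echo $(( 10#${v%?} * 1024 )) ;;
    *[Mm]) echo $(( 10#${v%?} )) ;;
    *)     echo $(( 10#$v )) ;;
  esac
}

# hotplug_headroom SIZE MAXMEM SLOTS: print maxmem - size in MiB.
hotplug_headroom() {
  local size max slots=$3
  # A suffix-less maxmem would mean bytes to QEMU, so require a suffix here.
  if [[ ! $2 =~ [MmGg]$ ]]; then
    echo "give maxmem a unit suffix (M or G)" >&2
    return 1
  fi
  size=$(to_mib "$1")
  max=$(to_mib "$2")
  if (( max < size )); then
    echo "maxmem ($2) must be at least size ($1)" >&2
    return 1
  fi
  echo "headroom: $(( max - size )) MiB across $slots slot(s)"
}

if (( $# == 3 )); then
  hotplug_headroom "$@"
fi
```

With the values from the command above, `./hotplug.sh 2048 8G 4` prints `headroom: 6144 MiB across 4 slot(s)`.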

Related

BusyBox

BusyBox is an extremely lightweight and versatile collection of command-line utilities. It integrates many common Unix/Linux commands (such as ls, cp, mv, sh, grep, and mount) into a single executable; each command is invoked either through a symlink named after the command or as an argument (e.g., `busybox ls`).

Compact Size: Ideal for resource-constrained environments such as embedded systems and routers.

Comprehensive Functionality: Includes most essential Unix/Linux commands and utilities.

Flexible Configuration: Can be compiled selectively to include only the commands you need.

Single Executable: All tools are integrated into one binary file, simplifying system administration and maintenance.

Static compilation example of BusyBox

```bash
wget https://busybox.net/downloads/busybox-1.36.1.tar.bz2
tar xjf busybox-1.36.1.tar.bz2
cd busybox-1.36.1
make defconfig
sed -i 's/^# CONFIG_STATIC is not set$/CONFIG_STATIC=y/' .config
make -j$(nproc)
make install
```

This builds a statically linked BusyBox and installs it to `_install/`. Verify it with the following command.

```bash
file _install/bin/busybox
```

The output should look like this:

```
ELF 64-bit LSB executable, x86-64, statically linked, ...
```

Then:

```bash
cd _install
mkdir -p dev proc sys
sudo mknod dev/console c 5 1
sudo mknod dev/null c 1 3
ln -sf /bin/busybox init
```

These commands prepare a minimal, bootable initramfs root filesystem. The main goals are:

To ensure the kernel can execute `/init` as the first user-space process after boot.

To enable essential I/O operations during early boot (e.g., logging and console access).

`cd _install`: changes into the BusyBox install directory. It contains the `bin/busybox` executable and will serve as the root of your initramfs.

`mkdir -p dev proc sys`: creates the top-level directories early user space needs:

/dev: holds device nodes such as `console` and `null` (created manually below).

/proc: mount point for `procfs`, which exposes kernel and process information.

/sys: mount point for `sysfs`, which exposes hardware and kernel information.

/proc and /sys are typically mounted by the init script during early boot so that user-space tools can query the kernel.

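`sudo mknod dev/console c 5 1`: creates the character device `/dev/console` (major 5, minor 1). The kernel opens it as the initial console for `/init`; if it is missing, the kernel prints "Warning: unable to open an initial console." and you will not see init's output or get a shell.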

`sudo mknod dev/null c 1 3`: creates `/dev/null` (major 1, minor 3), the "bit bucket". Many programs rely on `/dev/null` to discard output, and its absence can cause strange errors or crashes in user-space tools.

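`ln -sf /bin/busybox init`: creates the symbolic link `/init` pointing to BusyBox. After unpacking an initramfs, the Linux kernel executes `/init` (not `/sbin/init`; this can be changed with the `rdinit=` boot parameter). BusyBox is a multi-call binary that chooses the applet to run from the name it was invoked under, so when started as `/init` it runs its `init` applet (`make install` has already created symlinks such as `bin/sh` and `bin/ls` for the other applets). Linking `/init` to BusyBox therefore makes BusyBox init the first user-space process.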
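Before packing the archive, a quick check of the staging directory can catch a missing device node or a broken `init` link, which otherwise only shows up as a boot that hangs or prints nothing. The function below is a hypothetical helper that mirrors the steps just described; pass it the path of `_install` (or run it from inside that directory).

```bash
#!/usr/bin/env bash
# Sketch: sanity-check an initramfs staging directory before packing it.
# check_initramfs_root is a hypothetical helper; the checks mirror the
# mkdir/mknod/ln steps above.
set -euo pipefail

check_initramfs_root() {
  local root=${1:-.} rc=0 d
  [[ -x "$root/bin/busybox" ]] || { echo "bin/busybox missing or not executable" >&2; rc=1; }
  [[ "$(readlink "$root/init" || true)" == /bin/busybox ]] || { echo "init is not a symlink to /bin/busybox" >&2; rc=1; }
  [[ -c "$root/dev/console" ]] || { echo "dev/console is not a character device" >&2; rc=1; }
  [[ -c "$root/dev/null" ]] || { echo "dev/null is not a character device" >&2; rc=1; }
  for d in proc sys; do
    [[ -d "$root/$d" ]] || { echo "missing directory $d/" >&2; rc=1; }
  done
  return "$rc"
}

if (( $# > 0 )); then
  check_initramfs_root "$1" && echo "staging directory looks complete"
fi
```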

Booting at this point, BusyBox init reports that its startup script is missing:

```
can't run '/etc/init.d/rcS': No such file or directory
```

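To fix this, create a minimal startup script that mounts /proc and /sys and then starts a shell, and make it executable:

```bash
mkdir -p etc/init.d
cat > etc/init.d/rcS <<'EOF'
#!/bin/sh
mount -t proc none /proc
mount -t sysfs none /sys
echo "Welcome to minimal BusyBox system"
exec /bin/sh
EOF
chmod +x etc/init.d/rcS
```

Then pack the staging directory into a gzip-compressed cpio archive (`newc` is the cpio format the kernel expects for an initramfs):

```bash
find . | cpio -o --format=newc | gzip > ../../initramfs.gz
```

QEMU Start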

x

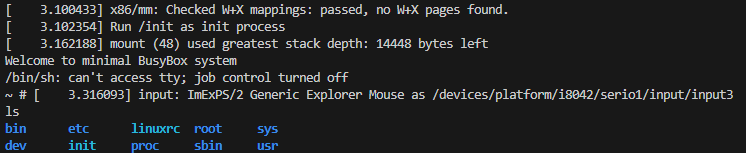

qemu-system-x86_64 -kernel /home/dell/jhlu/colloid/tpp/linux-6.3/arch/x86/boot/bzImage -initrd /home/dell/jhlu/learning/qemu_study/initramfs.gz -nographic -append "console=ttyS0 init=/init"outputs:

NUMA Support

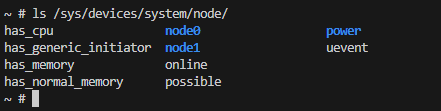

xxxxxxxxxxqemu-system-x86_64 -kernel /home/dell/jhlu/colloid/tpp/linux-6.3/arch/x86/boot/bzImage -initrd /home/dell/jhlu/learning/qemu_study/initramfs.gz -m 2G -smp 2 -numa node,mem=1024 -numa node,mem=1024 -nographic -append "root=/dev/ram console=ttyS0 rdinit=/init"outputs:
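One caveat for QEMU 10.0.3: `-numa node,mem=` is a legacy option that QEMU only accepts for machine types older than 5.1, so with the default machine type of a current build the command above is rejected and `memdev=` is expected instead. A sketch of the same two-node layout using `memory-backend-ram` objects follows. The kernel and initramfs paths are placeholders (override them with `KERNEL`/`INITRD`), and `numa_cmd` is a hypothetical helper. The backend sizes must add up to `-m`, and `cpus=` assigns one vCPU to each node explicitly.

```bash
#!/usr/bin/env bash
# Sketch: the two-node NUMA guest from above, rewritten with memdev=.
# Prints the command; set RUN=1 to execute it instead. KERNEL and INITRD
# default to placeholder paths (assumptions); point them at your own files.
set -euo pipefail

numa_cmd() {
  local kernel=${KERNEL:-bzImage} initrd=${INITRD:-initramfs.gz}
  NUMA_ARGS=(
    qemu-system-x86_64
    -kernel "$kernel" -initrd "$initrd"
    -m 2G -smp 2
    -object memory-backend-ram,id=m0,size=1G
    -object memory-backend-ram,id=m1,size=1G
    -numa node,nodeid=0,cpus=0,memdev=m0
    -numa node,nodeid=1,cpus=1,memdev=m1
    -nographic
    -append "root=/dev/ram console=ttyS0 rdinit=/init"
  )
}

numa_cmd
if [[ "${RUN:-0}" == 1 ]]; then
  exec "${NUMA_ARGS[@]}"
else
  printf '%q ' "${NUMA_ARGS[@]}"; echo
fi
```

Inside the guest, `ls /sys/devices/system/node` should then list `node0` and `node1`, provided the kernel was built with `CONFIG_NUMA`.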

Good Habit

1. Efficiently retrieving error messages during compilation

```bash
make -j$(nproc) 2>&1 | tee build.log
# then search the saved output for errors
grep error build.log
```
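One pitfall with `make ... | tee build.log`: the pipeline's exit status is `tee`'s, so a failed build still looks successful to a script. Bash keeps each command's status in the `PIPESTATUS` array (alternatively, `set -o pipefail`). The hypothetical `logged_run` helper below uses it to keep both the log and the build's real exit status.

```bash
#!/usr/bin/env bash
# Sketch: keep a build log without losing the build's exit status.
# logged_run is a hypothetical helper name.

# logged_run LOG CMD [ARGS...]: run CMD with stdout and stderr copied to LOG
# (and the terminal), then return CMD's own exit status, not tee's.
logged_run() {
  local log=$1
  shift
  "$@" 2>&1 | tee "$log"
  return "${PIPESTATUS[0]}"
}
```

Typical use: `logged_run build.log make -j"$(nproc)" || grep -n error build.log`, which prints the error lines (with line numbers) only when the build actually fails.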